When Salesforce first released the Data Processing Engine (DPE), I’ll admit—I ignored it. At the time, I was working with Financial Services Cloud (FSC) and relying heavily on Roll-Up by Lookup (RBL) for account summaries. DPE seemed abstract and overly complex compared to the familiar RBL approach.

Years later, while helping a client migrate to FSC, I had to revisit DPE. What started as curiosity turned into one of the most valuable Salesforce learning experiences I’ve had. DPE turned out to be a powerful, scalable tool that bridges the gap between Flows and Apex, allowing admins to handle data transformations at scale—without writing a single line of code.

In this post, I’ll break down what DPE actually does, how it compares to RBL, the challenges I ran into, and the practical lessons I wish I had known sooner.

What Exactly Is the Data Processing Engine?

Salesforce defines DPE as:

“A metadata-driven visual tool that helps you create definitions using various nodes for different types of data transformations.”

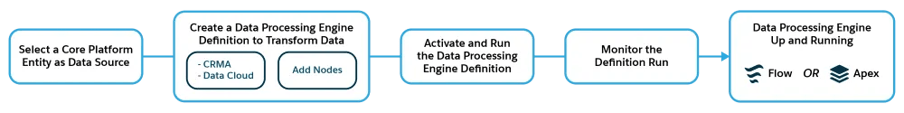

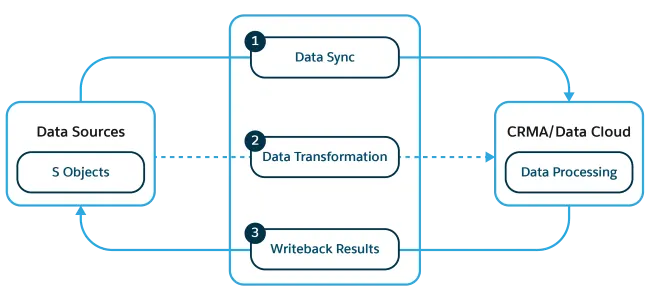

That’s a mouthful. At its core, DPE runs in three stages: define your data sources, transform the data, and write the results back.

Here’s what each part of that definition really means:

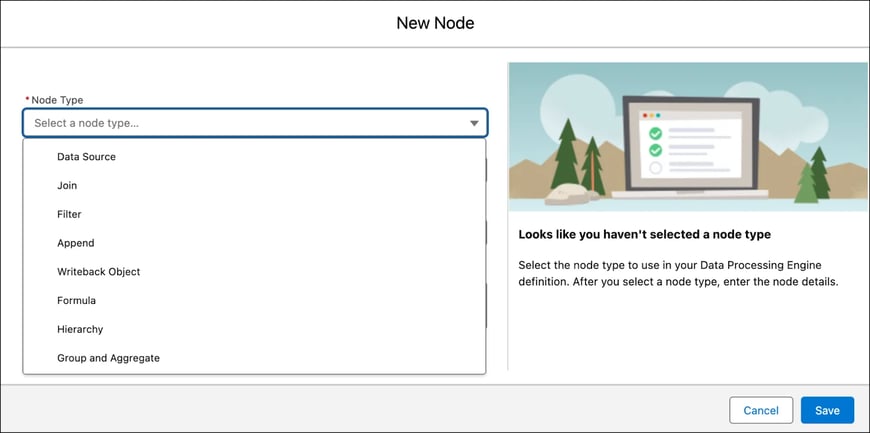

- Metadata-driven: Everything is built through clicks, not code — think Flow Builder, but designed for data operations

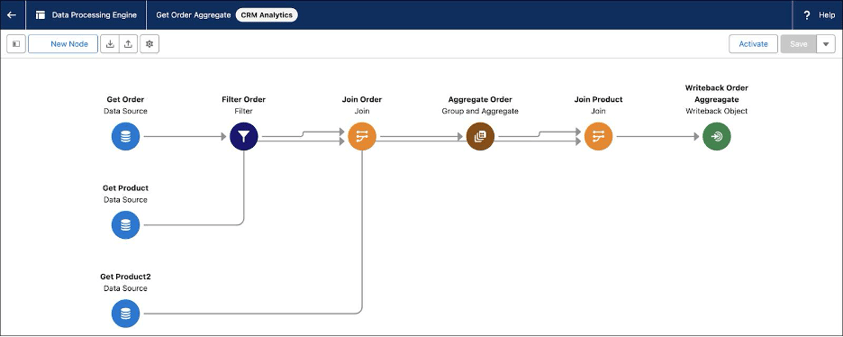

- Visual tool: You drag and drop “nodes” onto a canvas to define how data moves and transforms

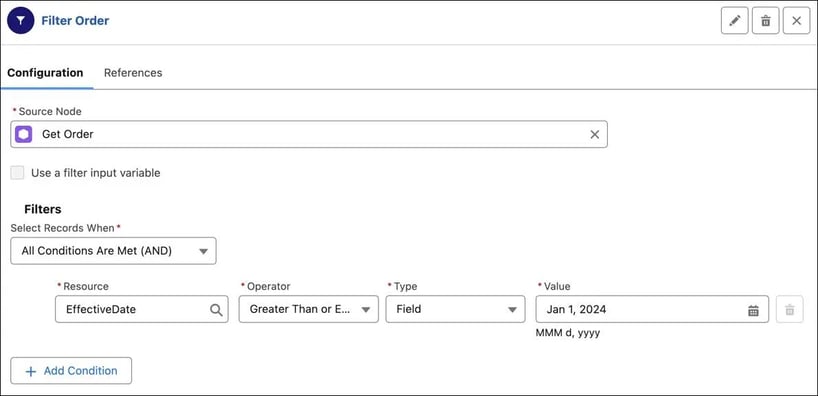

- Nodes: Each node performs a specific task — sourcing, joining, filtering, aggregating, or writing data

DPE essentially lets you build definitions — sets of data instructions that can run manually, on a schedule, or via automation (Flow, Apex, or API). In short, DPE is built for massive-scale data operations that would time out in Flow but don’t justify the complexity of Apex.
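
To make the source → transform → writeback idea concrete, here is a minimal sketch in plain Python. It is only an analogy, not DPE's API: the function names (`filter_node`, `aggregate_node`, `writeback_node`), the field names, and the sample rows are all hypothetical, and a real Definition is built on the canvas rather than in code.

```python
from collections import defaultdict

# Hypothetical sample rows standing in for a Data Source node.
transactions = [
    {"account": "A-1", "amount": 100.0, "status": "Posted"},
    {"account": "A-1", "amount": 50.0, "status": "Pending"},
    {"account": "A-2", "amount": 75.0, "status": "Posted"},
    {"account": "A-1", "amount": 25.0, "status": "Posted"},
]


def filter_node(rows, field, value):
    """Keep only rows whose field equals value (analogous to a filter step)."""
    return [row for row in rows if row[field] == value]


def aggregate_node(rows, group_by, sum_field):
    """Sum sum_field per group_by value (analogous to an aggregate step)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_by]] += row[sum_field]
    return [{group_by: key, "total": total} for key, total in totals.items()]


def writeback_node(rows, target, key_field):
    """Upsert rows into a dict that stands in for the target object."""
    for row in rows:
        target[row[key_field]] = row["total"]
    return target


# Chain the steps: source -> filter -> aggregate -> writeback.
posted = filter_node(transactions, "status", "Posted")
totals = aggregate_node(posted, "account", "amount")
account_totals = writeback_node(totals, {}, "account")
print(account_totals)  # {'A-1': 125.0, 'A-2': 75.0}
```

Each function takes rows in and hands rows out, which is roughly how nodes chain together in a Definition.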

From RBL to DPE: The Evolution

When FSC launched in 2016, it included Roll-Up by Lookup (RBL) to aggregate data from Financial Accounts to Households or Persons. It was handy, but limited. RBL only worked with the Financial Account object and couldn’t handle anything custom.

DPE, on the other hand, was built directly into the Salesforce platform, not as part of a managed package. That change unlocked far more power and flexibility. With DPE, you can roll up, join, or transform data across any object, even external data sources.

Think of it like this:

- RBL = “Roll up balances from Financial Account to Household”

- DPE = “Roll up anything to anywhere, with conditions, joins, and logic”

Real Client Scenarios: Where DPE Shines

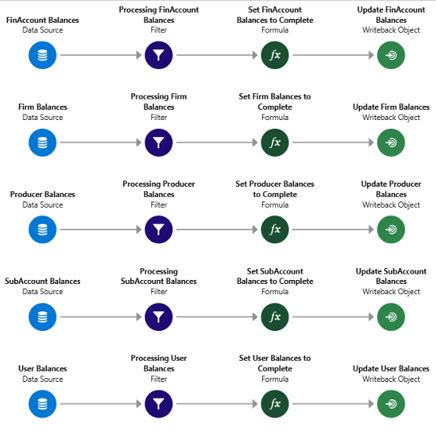

When I first implemented DPE, my client’s goal sounded simple: roll up financial data. The reality was more complex — data existed at multiple hierarchy levels and needed to roll up differently based on record types. Here’s how DPE handled each case:

1. Data Roll-Up (Classic Summaries)

Financial data lived in a custom object several levels below Account. We needed to aggregate these values up to the parent record.

Why DPE worked: RBL only supported Financial Account → Account roll-ups, but DPE can aggregate from any object
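
As a rough illustration of that multi-level roll-up, the sketch below joins a child data set to its parent and then sums per grandparent. It is plain Python, not DPE configuration, and the object and field names (`portfolios`, `positions`, `account_id`) are hypothetical stand-ins for the client's custom objects.

```python
from collections import defaultdict

# Hypothetical three-level hierarchy: Account <- Portfolio <- Position.
portfolios = [
    {"portfolio_id": "P-1", "account_id": "ACC-1"},
    {"portfolio_id": "P-2", "account_id": "ACC-1"},
    {"portfolio_id": "P-3", "account_id": "ACC-2"},
]
positions = [
    {"portfolio_id": "P-1", "value": 1000},
    {"portfolio_id": "P-1", "value": 250},
    {"portfolio_id": "P-2", "value": 500},
    {"portfolio_id": "P-3", "value": 40},
]

# Join step: map each portfolio to its parent account.
account_by_portfolio = {p["portfolio_id"]: p["account_id"] for p in portfolios}

# Aggregate step: sum position values per grandparent account.
rollup = defaultdict(int)
for position in positions:
    account_id = account_by_portfolio[position["portfolio_id"]]
    rollup[account_id] += position["value"]

account_rollup = dict(rollup)
print(account_rollup)  # {'ACC-1': 1750, 'ACC-2': 40}
```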

2. Record Roll-Up (Creating Summary Records)

We had recurring financial transactions stored in a lower-level object. The requirement: create summarized “snapshot” records at each level in the hierarchy to report historical data.

Solution: DPE definitions generated new child summary records with aggregated data for each level

3. Record Comparison (Historical vs Current)

We needed to compare two data sets from the same source to identify which records were “Current” and which were “Historical.”

My favorite discovery: Using Join nodes, I could compare two versions of the same data — no Apex required
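
One way to picture that comparison is to aggregate the data to the latest date per key, then join the original rows back to that aggregate. This is a plain-Python sketch of the idea under assumed field names (`account`, `as_of`, `balance`); the actual Definition I built may have been wired differently.

```python
# Hypothetical rows: several dated statements per account.
statements = [
    {"account": "ACC-1", "as_of": "2024-01-31", "balance": 900},
    {"account": "ACC-1", "as_of": "2024-02-29", "balance": 950},
    {"account": "ACC-2", "as_of": "2024-02-29", "balance": 120},
    {"account": "ACC-1", "as_of": "2023-12-31", "balance": 870},
]

# Branch 1: aggregate to the latest date per account.
# ISO-formatted date strings compare correctly as plain text.
latest = {}
for row in statements:
    key = row["account"]
    if key not in latest or row["as_of"] > latest[key]:
        latest[key] = row["as_of"]

# Branch 2: join the original rows back to the aggregate and label each one.
labeled = []
for row in statements:
    is_current = row["as_of"] == latest[row["account"]]
    labeled.append({**row, "status": "Current" if is_current else "Historical"})

for row in labeled:
    print(row["account"], row["as_of"], row["status"])
```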

4. Special Aggregation (Dynamic Targets)

In one case, certain transactions needed to roll up to an Account without a direct relationship.

For example, if a transaction had “Company A” as a picklist value, it needed to aggregate under the Account record for “Company A”

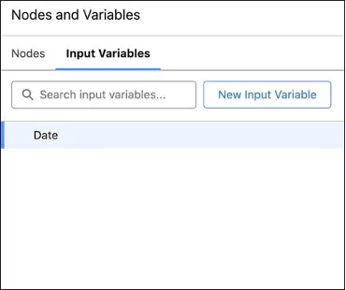

The trick? I created a DPE Definition with two input variables:

- A picklist value (e.g., “Company A”)

- A target Account ID

Instead of creating ten separate Definitions, one per company, I used a scheduled Flow to call the same DPE Definition multiple times, once for each picklist/Account pair. Each run passed in a different set of variable values, much like reusing a subflow with different inputs.
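
The pattern is easier to see as a function with two parameters that gets called in a loop. The sketch below is a plain-Python analogy only: `run_definition`, the field names, and the account IDs are hypothetical, and in the real build the loop lived in a scheduled Flow.

```python
def run_definition(rows, company, target_account_id):
    """Stand-in for one run of a Definition that takes two input variables."""
    total = sum(row["amount"] for row in rows if row["company"] == company)
    return {"account_id": target_account_id, "rolled_up_amount": total}


# Hypothetical transactions with a picklist-style company value.
transactions = [
    {"company": "Company A", "amount": 200},
    {"company": "Company B", "amount": 75},
    {"company": "Company A", "amount": 50},
]

# One (picklist value, target Account ID) pair per run.
company_account_pairs = [("Company A", "ACC-A"), ("Company B", "ACC-B")]

results = [
    run_definition(transactions, company, account_id)
    for company, account_id in company_account_pairs
]
print(results)
```

Adding an eleventh company then means adding one more pair, not one more Definition.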

Building a Definition: What I Learned the Hard Way

Getting started with DPE wasn’t smooth. Even with years of Flow experience, the logic model is completely different. Here are the biggest lessons I learned:

1. Logic Order Is Implicit

One of the first challenges I ran into with DPE was understanding how it determines the order of execution.

In Flow Builder, everything connects to a single Start node — you control exactly what runs and when. DPE works differently. Each Data Source node acts as its own starting point, and you must define all of them before building the rest of your Definition. Once you add more than one Data Source, you can’t dictate which one runs first — DPE decides.

You do, however, have two ways to influence sequence:

- Node connections: Control the order of transformations by how you link nodes together

- Writeback priority: Assign a numeric order to each Writeback node to determine which executes first

Because multiple Data Sources can exist in one Definition, you can also create parallel data flows that have no direct relation to each other. Whether you design one large, complex Definition or several smaller, modular ones is up to you — DPE will execute every branch you build.

Tip: Use Writeback node order values to define when each output executes.

2. No Conditional Logic

Unlike Flow, the Data Processing Engine doesn’t support conditional paths or “if/else” logic. When a Definition runs, it executes every node in the process — no exceptions.

You can branch connections to perform multiple transformations in parallel, but those branches all run regardless of conditions.

If you need true conditional behavior (for example, only running certain definitions when criteria are met), handle that logic in the Flow or Apex that triggers the DPE Definition.
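
In other words, the branching lives in the caller. Here is a minimal sketch of that split in plain Python; the Definition names and the `run_definition` helper are hypothetical placeholders for whatever your Flow or Apex actually invokes.

```python
def run_definition(name, run_log):
    """Hypothetical stand-in for triggering a Definition; all its nodes would run."""
    run_log.append(name)


def nightly_job(is_month_end, new_transaction_count):
    """Caller-side branching: decide which Definitions to trigger at all."""
    run_log = []
    if new_transaction_count > 0:
        run_definition("Daily_Rollup", run_log)
    if is_month_end:
        run_definition("Monthly_Snapshot", run_log)
    return run_log


print(nightly_job(is_month_end=False, new_transaction_count=5))
print(nightly_job(is_month_end=True, new_transaction_count=0))
```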

3. Flat Data Model

Data in DPE is represented as rows and columns, not as Objects. You can join completely different data sets without worrying about lookup relationships, but it also means you lose type-specific helpers.
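
A quick way to internalize the flat model is to think of every data set as a list of rows and every join as a match on column values. The sketch below shows that in plain Python with two hypothetical, unrelated data sets (`branch_targets` and `survey_scores`); it illustrates the concept rather than how DPE implements joins.

```python
def inner_join(left, right, left_key, right_key):
    """Match rows on plain column values; no lookup relationship is involved."""
    index = {}
    for right_row in right:
        index.setdefault(right_row[right_key], []).append(right_row)
    joined = []
    for left_row in left:
        for right_row in index.get(left_row[left_key], []):
            joined.append({**left_row, **right_row})
    return joined


# Two hypothetical data sets that share only a text value.
branch_targets = [
    {"region": "West", "target": 500},
    {"region": "East", "target": 300},
]
survey_scores = [
    {"region_name": "West", "score": 4.5},
    {"region_name": "North", "score": 3.9},
]

joined_rows = inner_join(branch_targets, survey_scores, "region", "region_name")
print(joined_rows)
```

Only the "West" rows match, so the result is a single combined row carrying columns from both sides.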

4. Visual Layout Is Temporary

You can freely drag and arrange nodes across the DPE canvas, just like in Flow, but don’t waste the effort. As soon as you save your Definition, DPE automatically resets the layout based on its own logic. Focus on function, not aesthetics.

5. No Simulated Debug or Rollback

Unlike Flow, the Data Processing Engine doesn’t offer a debug or rollback mode. When you run a Definition, it commits real changes to your data — no simulation or safety net.

Until Salesforce adds a rollback option, I recommend testing with filters that limit the number of records processed. This keeps test runs faster, safer, and easier to troubleshoot.
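
The idea of a limiting filter can be sketched like this in plain Python. The `TEST_ACCOUNT_IDS` set, the `scope_rows` helper, and the sample rows are hypothetical; in DPE you would express the same restriction as a filter condition near the start of the Definition.

```python
# Hypothetical IDs of a few sandbox accounts reserved for testing.
TEST_ACCOUNT_IDS = {"ACC-1", "ACC-2"}


def scope_rows(rows, test_mode):
    """Return only the test accounts' rows in test mode, otherwise all rows."""
    if not test_mode:
        return list(rows)
    return [row for row in rows if row["account_id"] in TEST_ACCOUNT_IDS]


all_rows = [{"account_id": f"ACC-{n}", "amount": n * 10} for n in range(1, 6)]

test_rows = scope_rows(all_rows, test_mode=True)
print(len(all_rows), len(test_rows))  # 5 2
```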

6. No Built-In Versioning

DPE doesn’t include built-in version control like Flow does. You can use Save As to clone a Definition, but there’s no native version history or rollback.

To manage versions manually, clone and rename each iteration (e.g., DefinitionName_V1, DefinitionName_V2). This simple habit is critical for complex builds, especially since the DPE builder can still be a bit buggy.

Executing and Monitoring

There’s no auto-trigger built into DPE, so you’ll need to call your definitions manually or through automation:

- Manual: Run from Setup → Workflow Services → Data Processing Engine

- Scheduled Flow: Call your Definition by name

- Apex or API: For large-scale or on-demand runs

To make a Flow wait until the DPE run finishes, configure a Wait element that pauses until the workflow service completes.

Common Pitfalls & Workarounds

Here are the issues I ran into — and what I’d do differently next time:

- Column Renames Break Everything: If you rename a column in a Data Source, every downstream node using it must be updated manually

- Cryptic Errors: When DPE fails, error messages aren’t descriptive. Sometimes, recreating the Definition is faster than debugging

- Performance Issues: Large Definitions can get buggy. Keep logic modular

- Rebuild Strategy: For big changes, clone → modify → test on a small data set
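
The column-rename pitfall is essentially a broken-reference problem, and it can help to think of it that way when planning a change. The sketch below is plain Python with a hypothetical list of nodes and the columns each one uses; DPE doesn't expose anything like `find_broken_references`, so treat it as a paper checklist in code form.

```python
def find_broken_references(available_columns, nodes):
    """List (node name, column) pairs where a node uses a missing column."""
    broken = []
    for node in nodes:
        for column in node["uses"]:
            if column not in available_columns:
                broken.append((node["name"], column))
    return broken


# Hypothetical downstream nodes and the source columns they depend on.
nodes = [
    {"name": "Filter posted", "uses": ["Status"]},
    {"name": "Sum by account", "uses": ["AccountId", "Amount"]},
    {"name": "Write totals", "uses": ["AccountId", "Amount"]},
]

columns_before = {"AccountId", "Amount", "Status"}
columns_after = {"AccountId", "TotalAmount", "Status"}  # "Amount" was renamed

broken_before = find_broken_references(columns_before, nodes)
broken_after = find_broken_references(columns_after, nodes)
print(broken_after)
```

Listing which nodes use which columns before renaming anything tells you up front how many nodes you will have to touch.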

My Advice for Anyone Starting with DPE

If you’re diving into DPE for the first time, here’s what I’d emphasize:

1. Plan Before You Build

Sketch out your data flow. Know your source, transformations, and destinations before dropping your first node.

2. Version Everything

Clone before editing. DPE doesn’t track versions, and bugs can corrupt a Definition.

3. Think Small

Break large data transformations into multiple smaller Definitions. It’s faster to test and easier to maintain.

4. Start Simple, Then Expand

Once you understand the pattern — Data Source → Transform → Writeback — you’ll start seeing endless possibilities.

Final Thoughts

The Data Processing Engine might look intimidating at first, but once you understand its structure, it becomes an admin’s secret weapon. It’s more powerful than Flow, less complex than Apex, and capable of handling data scenarios that used to be impossible without code.

If you’re in Financial Services Cloud — or any org with complex roll-up and aggregation needs — it’s worth the time investment. My first DPE project started as a simple roll-up request. It ended with ten Definitions and a new appreciation for how Salesforce continues to close the gap between clicks and code.